Max Coenen, M. Sc.

Main Supervisor: F. Rottensteiner; Co-Supervisor: C. Heipke

The highly dynamic nature of street environments is one of the biggest challenges for autonomous driving. The precise reconstruction of moving objects, especially of other cars, is fundamental to ensure safe navigation and to enable applications such as interactive motion planning and collaborative positioning. Cameras provide a cost-effective way to capture perceptive data of a vehicle's surroundings. Against this background, the project mainly uses stereo images, acquired by stereo camera rigs mounted on moving vehicles, as observations. Its goal is to detect other sensor nodes, i.e. other vehicles in this case, and to determine their relative poses. This information, termed dynamic control information, can be used as input for collaborative positioning approaches.

The reconstruction of the 3D pose and shape of objects from images is an ill-posed problem, since the projection from 3D space to the 2D image plane leaves many ambiguities about the 3D object. A common approach to object reconstruction is to use deformable shape models as shape priors and to align them with the object in the image, e.g. by matching entities such as surfaces, keypoints, edges, or contours of the deformable 3D model to the corresponding entities inferred from the image. In this project, we use such a deformable vehicle model and present a method that reconstructs vehicles in 3D from street-level stereo image pairs, allowing the derivation of precise 3D pose and shape parameters. Starting from vehicles initially detected by a state-of-the-art detection approach, the project makes the following contributions.

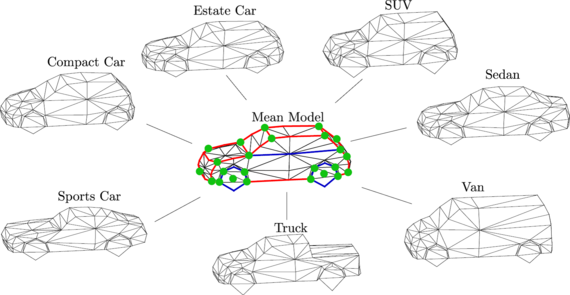

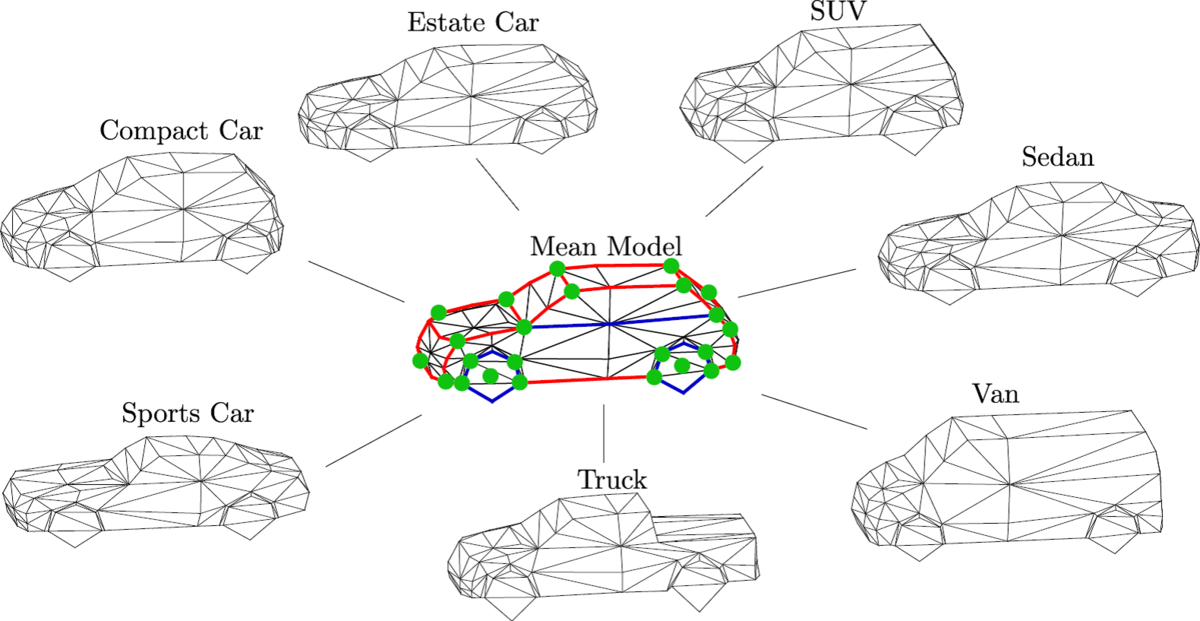

A subcategory-aware Active Shape Model (ASM) is proposed as a deformable representation of vehicle shape. In contrast to the classical ASM formulation, shape priors are learned separately for each of several distinct vehicle types instead of for the whole category ‘vehicle’, which allows more precise and realistic constraints on the shape parameters. Model deformations corresponding to different vehicle types are shown in Fig. 1.
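A minimal sketch of the subcategory-aware ASM idea, assuming the classical PCA-based ASM formulation: every training shape is a fixed set of corresponding 3D vertices, and a separate mean shape and set of eigenvectors is learned for each vehicle type. The function names, the flattened (S, 3V) data layout and the ±3 standard deviation limit on the shape parameters are illustrative assumptions, not the project's actual implementation.

```python
import numpy as np


def learn_asm(shapes, n_modes):
    """Learn a PCA-based Active Shape Model from aligned training shapes.

    shapes: (S, 3V) array; each row holds V corresponding 3D vertices, flattened.
    Returns the mean shape (3V,), eigenvectors (3V, n_modes) and eigenvalues (n_modes,).
    """
    shapes = np.asarray(shapes, dtype=float)
    mean = shapes.mean(axis=0)
    # The right singular vectors of the centered data are the eigenvectors of the
    # sample covariance; the squared singular values / (S - 1) are its eigenvalues.
    _, sing, vt = np.linalg.svd(shapes - mean, full_matrices=False)
    eigvals = sing ** 2 / (shapes.shape[0] - 1)
    return mean, vt[:n_modes].T, eigvals[:n_modes]


def learn_subcategory_asms(shapes_by_type, n_modes):
    """Learn one ASM per vehicle type instead of one for the whole category."""
    return {vtype: learn_asm(s, n_modes) for vtype, s in shapes_by_type.items()}


def synthesize(model, b):
    """Deform the mean shape with parameters b, limited to +/-3 std. deviations per mode."""
    mean, modes, eigvals = model
    limit = 3.0 * np.sqrt(eigvals)
    b = np.clip(np.asarray(b, dtype=float), -limit, limit)
    return (mean + modes @ b).reshape(-1, 3)
```

Because each vehicle type has its own eigenvalues, the clamping in `synthesize` bounds the deformation by the variation actually observed within that type, which is the motivation for type-specific shape priors.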

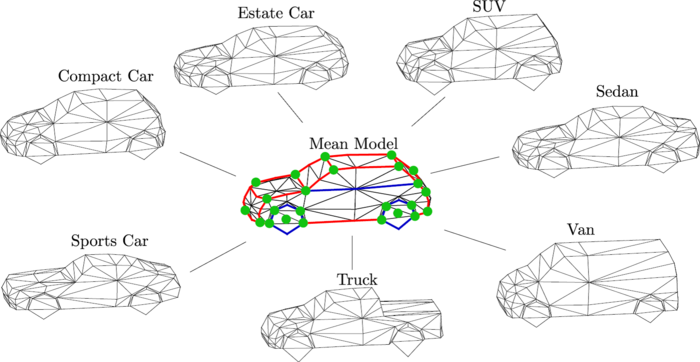

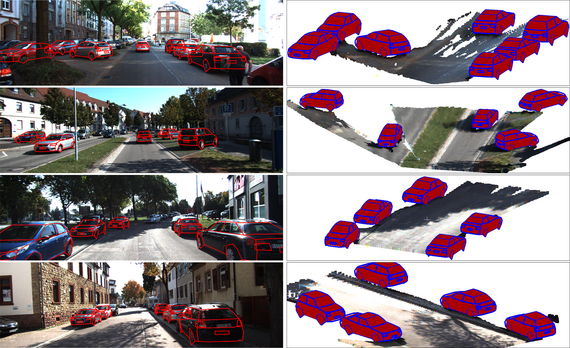

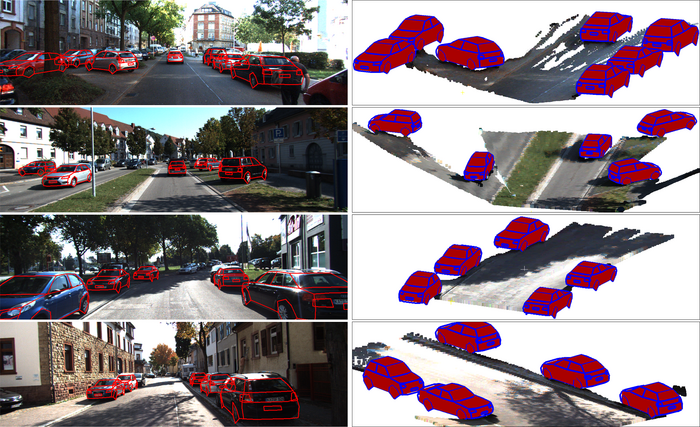

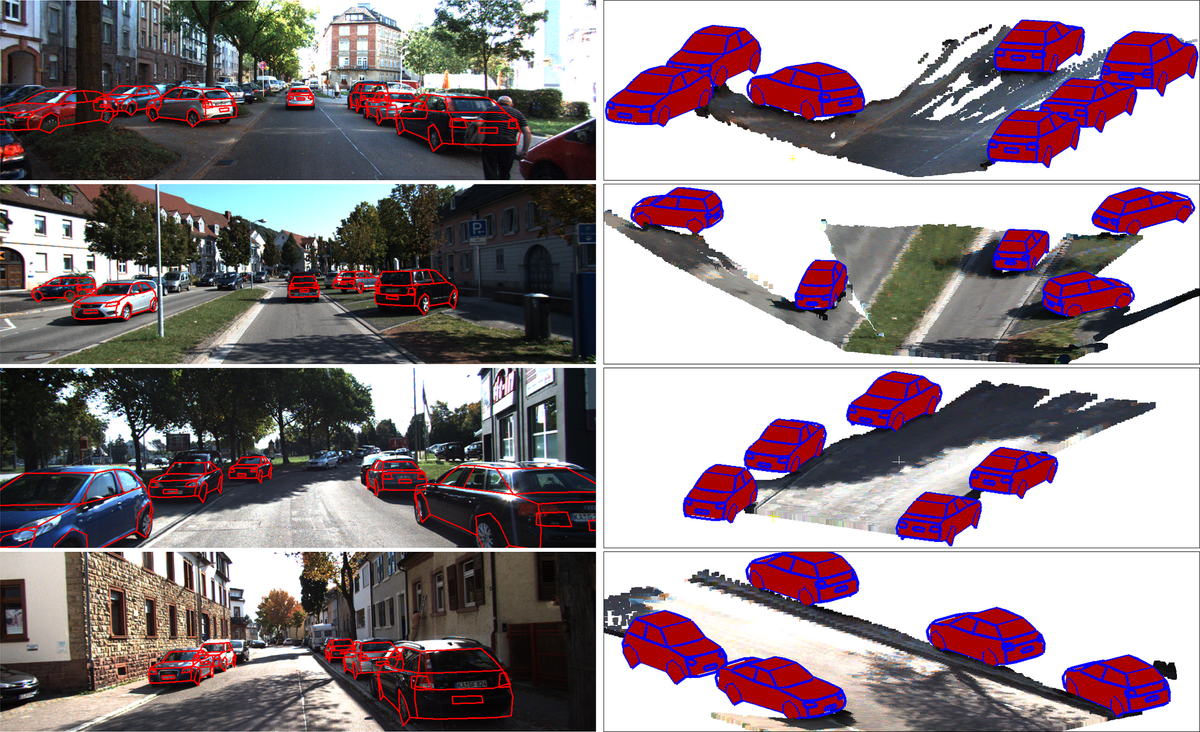

A novel multi-task convolutional neural network (CNN) is developed that simultaneously detects vehicle keypoints and vehicle wireframe edges (cf. Fig. 2) and additionally predicts probability distributions over the vehicle's orientation and over the vehicle's type (e.g. compact car, estate car, sedan, van). For orientation estimation, a novel hierarchical class and classifier structure is defined; for the detection of keypoints and wireframes, a novel loss is defined. For the purpose of vehicle reconstruction, a comprehensive probabilistic model is formulated, based mainly on the outputs of the multi-task CNN but also on 3D and scene information derived from the stereo data. More specifically, the probabilistic model incorporates multiple likelihood functions, which simultaneously fit the surface of the deformable 3D model to the 3D points reconstructed from stereo, match the model keypoints to the detected keypoints, and align the model wireframe with the wireframe inferred by the CNN (cf. Fig. 2).
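The observation likelihoods can be illustrated with a hedged sketch that assumes independent Gaussian noise on point-to-model distances and keypoint reprojection residuals, a pinhole camera with intrinsic matrix `K`, and a per-pixel wireframe probability map produced by the CNN. All function names, noise parameters and weights, as well as the nearest-vertex approximation of the point-to-surface distance, are hypothetical simplifications chosen for brevity; they are not the project's actual likelihood formulations.

```python
import numpy as np


def project(points_cam, K):
    """Pinhole projection of (N, 3) camera-frame points to (N, 2) pixel coordinates."""
    uvw = points_cam @ K.T
    return uvw[:, :2] / uvw[:, 2:3]


def surface_log_likelihood(model_vertices, stereo_points, sigma):
    """Gaussian log-likelihood (up to a constant) of stereo points given the model.

    The point-to-surface distance is approximated by the distance to the
    nearest model vertex (hypothetical simplification).
    """
    diff = stereo_points[:, None, :] - model_vertices[None, :, :]
    d = np.linalg.norm(diff, axis=2).min(axis=1)
    return -0.5 * float(np.sum((d / sigma) ** 2))


def keypoint_log_likelihood(model_keypoints_cam, detected_uv, K, sigma_px):
    """Gaussian log-likelihood (up to a constant) of the detected 2D keypoints."""
    residuals = np.linalg.norm(project(model_keypoints_cam, K) - detected_uv, axis=1)
    return -0.5 * float(np.sum((residuals / sigma_px) ** 2))


def wireframe_log_likelihood(edge_prob_map, model_edges_cam, K, n_samples=20, eps=1e-6):
    """Mean log-probability of the CNN wireframe map along the projected model edges.

    model_edges_cam: (E, 2, 3) array holding the 3D end points of each model edge.
    """
    h, w = edge_prob_map.shape
    t = np.linspace(0.0, 1.0, n_samples)[:, None]
    total, count = 0.0, 0
    for a, b in model_edges_cam:
        # Sample along the 3D edge, then project each sample into the image
        uv = np.rint(project((1.0 - t) * a + t * b, K)).astype(int)
        inside = (uv[:, 0] >= 0) & (uv[:, 0] < w) & (uv[:, 1] >= 0) & (uv[:, 1] < h)
        probs = edge_prob_map[uv[inside, 1], uv[inside, 0]]
        total += float(np.log(probs + eps).sum())
        count += probs.size
    return total / max(count, 1)


def observation_log_likelihood(terms, weights):
    """Weighted sum of the individual log-likelihood terms (weights are hypothetical)."""
    return sum(weights[name] * value for name, value in terms.items())
```

Sampling the edges in 3D before projecting keeps the samples consistent under perspective; a real implementation would also need to handle self-occluded model edges, which this sketch ignores.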

In addition to the observation likelihoods mentioned so far, state prior terms, based on inferred scene knowledge as well as on the probability distributions for orientation and vehicle type derived by the CNN, are used to regularize the determination of the target pose and shape parameters in the probabilistic model.
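State priors can be added to the observation log-likelihood to obtain an unnormalized log-posterior. The sketch below assumes that the CNN's orientation output is a discrete distribution over equally sized heading bins, that the type output is a dictionary of class probabilities, and that the scene knowledge is an estimated ground plane on which the vehicle should stand. These choices, the helper names and the ground-plane noise `sigma_ground` are hypothetical and only illustrate how such terms can regularize the estimate.

```python
import numpy as np


def orientation_log_prior(theta, bin_probs, eps=1e-9):
    """Log-probability of heading theta (rad) under a CNN distribution over
    equally sized bins covering [0, 2*pi)."""
    n_bins = len(bin_probs)
    frac = (theta % (2.0 * np.pi)) / (2.0 * np.pi)
    idx = int(np.floor(frac * n_bins)) % n_bins  # modulo guards against rounding up to n_bins
    return float(np.log(bin_probs[idx] + eps))


def type_log_prior(vehicle_type, type_probs, eps=1e-9):
    """Log-probability of a vehicle type under the CNN's type distribution (dict)."""
    return float(np.log(type_probs[vehicle_type] + eps))


def ground_plane_log_prior(position, plane_normal, plane_offset, sigma):
    """Gaussian log-prior (up to a constant) on the signed distance of the
    vehicle's ground contact point from the plane n.x + d = 0."""
    n = np.asarray(plane_normal, dtype=float)
    dist = float(n @ np.asarray(position, dtype=float) + plane_offset) / np.linalg.norm(n)
    return -0.5 * (dist / sigma) ** 2


def log_posterior(log_likelihood, theta, vehicle_type, position,
                  cnn_orientation, cnn_types, plane, sigma_ground=0.1):
    """Unnormalized log-posterior: observation log-likelihood plus state priors."""
    normal, offset = plane
    return (log_likelihood
            + orientation_log_prior(theta, cnn_orientation)
            + type_log_prior(vehicle_type, cnn_types)
            + ground_plane_log_prior(position, normal, offset, sigma_ground))
```

Maximizing this quantity over pose and shape parameters lets a confident CNN orientation or type prediction pull the fit away from configurations that the image-based likelihoods alone cannot rule out.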

The developed method is evaluated on publicly available benchmark data and on our own data set recorded in the context of the i.c.sens mapathons. The latter was created by labelling parts of the data acquired during the mapathons, i.e. by manually fitting CAD models to the observations, which yields reference data for the evaluation of this project. Depending on the level of occlusion, our method estimates up to 98.9% of the orientations and up to 80.6% of the positions correctly. The average errors amount to 3.1° for orientation and 33 cm for position. Qualitative results are shown in Fig. 3.
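Metrics of the kind reported above can be computed as sketched below. The thresholds that define a "correct" orientation or position are not stated here, so `max_orient_deg` and `max_pos_m` are hypothetical defaults; likewise, this sketch averages the errors over all vehicles, which may differ from the evaluation protocol actually used. Orientation differences are wrapped to [0°, 180°], so that, for example, 350° and 10° differ by 20°.

```python
import numpy as np


def orientation_errors_deg(est_deg, ref_deg):
    """Absolute heading error in degrees, wrapped to [0, 180]."""
    diff = (np.asarray(est_deg, dtype=float) - np.asarray(ref_deg, dtype=float) + 180.0) % 360.0 - 180.0
    return np.abs(diff)


def position_errors(est_xyz, ref_xyz):
    """Euclidean position error per vehicle (same unit as the input, e.g. metres)."""
    return np.linalg.norm(np.asarray(est_xyz, dtype=float) - np.asarray(ref_xyz, dtype=float), axis=1)


def summarize(est_deg, ref_deg, est_xyz, ref_xyz, max_orient_deg=22.5, max_pos_m=0.75):
    """Percentages of correct estimates and mean errors (thresholds are hypothetical)."""
    o = orientation_errors_deg(est_deg, ref_deg)
    p = position_errors(est_xyz, ref_xyz)
    return {
        "orientation_correct_pct": 100.0 * float(np.mean(o <= max_orient_deg)),
        "position_correct_pct": 100.0 * float(np.mean(p <= max_pos_m)),
        "mean_orientation_err_deg": float(o.mean()),
        "mean_position_err_m": float(p.mean()),
    }
```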

Nienburger Str. 1

30167 Hannover
