Improved Perception of the Environment using Convolutional Neural Networks

Dinh Tuan Nguyen, M. Sc.

First supervisor: F. Rottensteiner; co-supervisor: C. Brenner

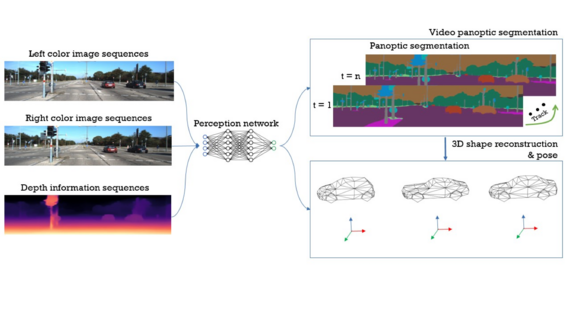

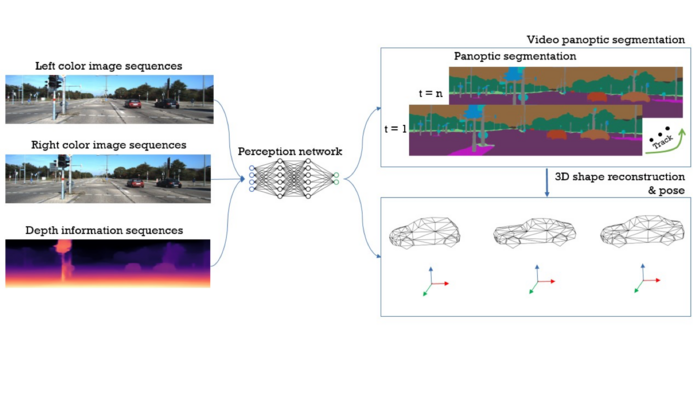

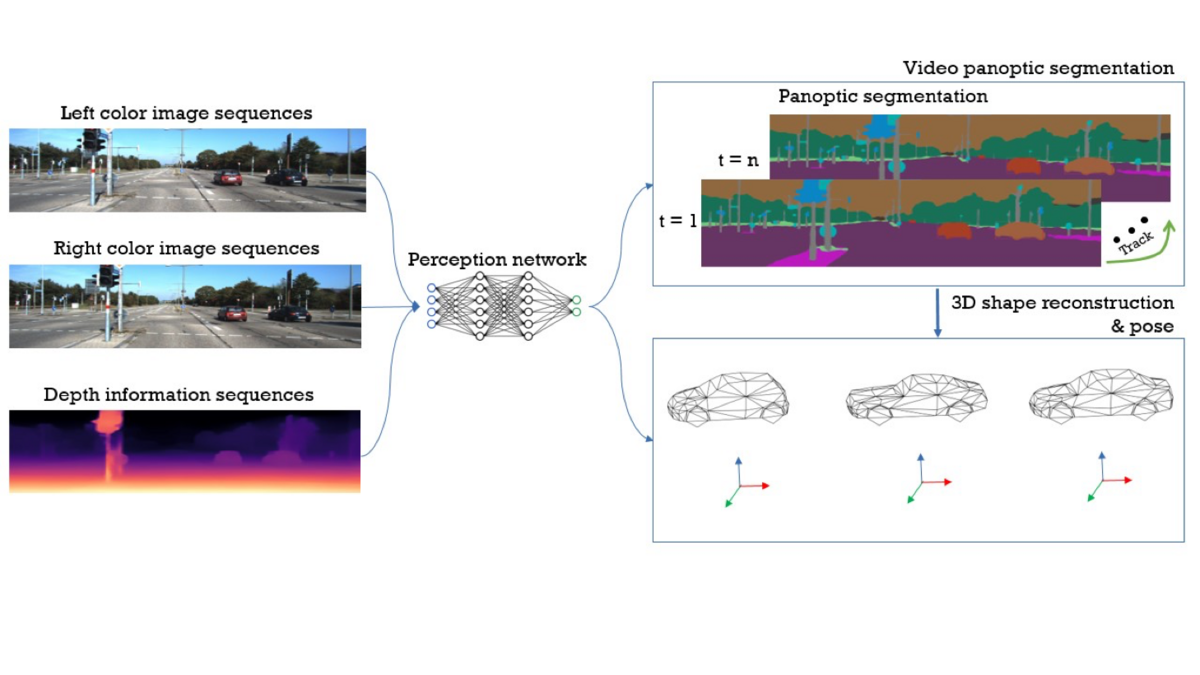

This project aims to develop methods for improving the collaborative positioning of vehicles in areas without adequate GNSS coverage on the basis of stereo image sequences. The focus is on improving the perception of the environment, so the poses and shapes of vehicles in the surroundings of the ego-position are of particular interest. Thus, the main goal of this project is to develop a CNN-based method that integrates the detection and semantic classification of objects via panoptic segmentation with the reconstruction of the 3D geometry of these objects. Because the scene is assumed to be dynamic, both through ego-motion and through moving objects, temporal information is expected to be beneficial and is taken into account in the developed method.

Panoptic segmentation can be seen as a combination of semantic segmentation and instance segmentation. Every pixel of the input image is assigned a class label, which corresponds to semantic segmentation. The classes fall into two groups, stuff classes and things classes, and for the things classes the individual instances of the related objects also have to be identified. In the context of i.c.sens, the overall program this project is part of (see below for details), the stuff classes correspond to objects such as the ground, building facades or trees, whereas the things classes correspond to objects such as vehicles or pedestrians. Video panoptic segmentation extends this concept by additionally propagating the obtained information across neighboring frames, thus defining panoptic segmentation as a function of time. This ensures that a specific object identified at frame t-1 is assigned the same ID at frame t. However, most methods from the literature consider monoscopic image sequences only, make no use of depth information, and require some post-processing of the CNN outputs to obtain a temporally consistent representation of the panoptic information. Thus, these or similar methods have to be extended to accept depth maps derived from stereo as an additional input, both to improve accuracy and to avoid the post-processing effort. In addition, for selected things classes, e.g. cars, the CNN should also predict the shape and pose parameters describing these objects in 3D, extending earlier work conducted in i.c.sens to a method that is capable of end-to-end learning (see Figure 1).
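To make the label structure concrete, the following minimal Python sketch fuses a semantic map and an instance map into a panoptic map and then carries instance IDs from frame t-1 to frame t by greedy overlap matching. It only illustrates the kind of post-processing step that existing methods rely on; it is not the method developed in this project. The class numbering, the function names `merge_panoptic` and `propagate_ids`, and the IoU threshold of 0.5 are assumptions made for this example.

```python
from collections import Counter

# Hypothetical label set: 0 = ground and 1 = building (stuff), 2 = car (thing).
THING_CLASSES = {2}


def merge_panoptic(semantic, instance, thing_classes=THING_CLASSES):
    """Fuse a class map and an instance map into per-pixel (class, id) pairs.

    Stuff pixels always get instance ID 0; thing pixels keep their ID.
    """
    return [
        [(c, i if c in thing_classes else 0) for c, i in zip(sem_row, inst_row)]
        for sem_row, inst_row in zip(semantic, instance)
    ]


def propagate_ids(prev, curr, thing_classes=THING_CLASSES, min_iou=0.5):
    """Relabel thing segments of `curr` with the IDs of matching `prev` segments."""
    area_prev, area_curr, overlap = Counter(), Counter(), Counter()
    for row_p, row_c in zip(prev, curr):
        for p, c in zip(row_p, row_c):
            area_prev[p] += 1
            area_curr[c] += 1
            overlap[(p, c)] += 1

    # Candidate matches: same thing class and sufficient intersection over union.
    candidates = []
    for (p, c), n in overlap.items():
        if p[0] == c[0] and c[0] in thing_classes:
            iou = n / (area_prev[p] + area_curr[c] - n)
            if iou >= min_iou:
                candidates.append((iou, p, c))

    # Greedy one-to-one assignment, best overlap first.
    mapping, used = {}, set()
    for iou, p, c in sorted(candidates, reverse=True):
        if c not in mapping and p not in used:
            mapping[c] = p
            used.add(p)

    # Unmatched thing segments are treated as new objects and get unused IDs.
    next_id = max(seg[1] for seg in list(area_prev) + list(area_curr)) + 1
    for c in sorted(area_curr):
        if c[0] in thing_classes and c not in mapping:
            mapping[c] = (c[0], next_id)
            next_id += 1

    return [[mapping.get(c, c) for c in row] for row in curr]


# Toy 2x4 frames: a car with ID 7 at t-1 grows by one pixel column at t,
# where the per-frame instance map happens to call it ID 1.
sem_prev = [[0, 0, 2, 2], [0, 0, 2, 2]]
inst_prev = [[0, 0, 7, 7], [0, 0, 7, 7]]
sem_curr = [[0, 2, 2, 2], [0, 2, 2, 2]]
inst_curr = [[0, 1, 1, 1], [0, 1, 1, 1]]

pan_prev = merge_panoptic(sem_prev, inst_prev)
pan_curr = merge_panoptic(sem_curr, inst_curr)
tracked = propagate_ids(pan_prev, pan_curr)
print(tracked[0])  # [(0, 0), (2, 7), (2, 7), (2, 7)]
```

Nested lists are used here only to keep the sketch dependency-free; real image-sized maps would normally be handled with array operations. Matching purely on 2D overlap is also the kind of heuristic that tends to fail under fast motion or occlusion, which is one reason the text argues for learning temporal consistency inside the network and for using depth as an additional input.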

To evaluate the proposed method, we will compare it with state-of-the-art RGB and RGB-D methods on publicly available segmentation datasets such as KITTI and Cityscapes. However, because datasets with a reference for 3D shape reconstruction are scarce, we will also collect a new dataset in the context of an i.c.sens Mapathon. This dataset will include such a reference so that the 3D shape reconstruction of the proposed method can be validated.
