Supervisor: apl. Prof. Dr. techn. Rottensteiner

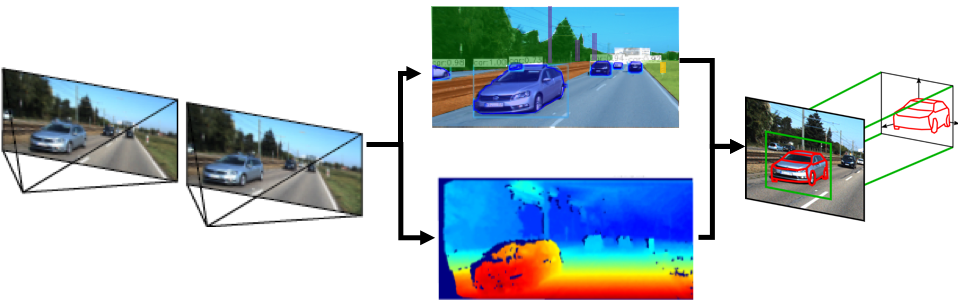

This project aims to develop methods, based on stereo imagery, that improve the collaborative positioning of vehicles in areas without adequate GNSS coverage. The focus is on improving the perception of the environment using convolutional neural networks (CNNs). The main goal of this PhD project is to develop a CNN that integrates two tasks that are usually treated separately: the 3D reconstruction of a dense point cloud from stereo data and the semantic interpretation of the scene in the form of a panoptic segmentation. Panoptic segmentation can be seen as the combination of semantic segmentation and instance segmentation [Kirillov et al., 2019]: as in semantic segmentation, every pixel of the input image is assigned a class label; however, the classes are divided into two groups (stuff and things classes), and for the things classes, the individual instances of the related objects must also be identified. In the context of i.c.sens, the stuff classes correspond to objects such as the ground, building facades or trees, whereas the things classes correspond to objects such as vehicles or pedestrians. Integrating panoptic segmentation with 3D reconstruction is expected to improve the results of both tasks. This part of the project extends earlier work on CNN-based 3D reconstruction carried out in i.c.sens [Mehltretter & Heipke, 2021]. As a secondary goal, the CNN could be extended to additionally predict information about the detected vehicles, in particular parameters describing their shape and, potentially, their pose; this would build on previous work conducted in i.c.sens [Coenen & Rottensteiner, 2021]. The results obtained by these methods can be used to support collaborative localisation.
On the one hand, the semantic information contained in the stuff classes could support the assignment of points derived from stereo images to existing data such as a coarse 3D city model, which can provide control information for localisation [Unger, 2020]; on the other hand, improved methods for detecting vehicles and reconstructing their shapes and poses can provide additional and/or more accurate vehicle-to-vehicle observations [García Fernández, 2020; Trusheim et al., 2021].
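The two uses of the panoptic output described above can be illustrated with a minimal sketch in plain Python. It assumes a rectified stereo pair with focal length `f` (pixels), baseline `baseline` (metres) and principal point (`cx`, `cy`), so that depth follows from Z = f·B/d, and it assumes the common packing convention `class_id * 1000 + instance_id` for panoptic labels. The class IDs and all function names (`panoptic_id`, `labelled_points`, `stuff_points`, `thing_instances`) are hypothetical, and disparity and labels are simply given as inputs rather than predicted by the project's CNN.

```python
from typing import Dict, List, Tuple

# Hypothetical label set for illustration only; the project does not define class IDs.
GROUND, FACADE, TREE = 0, 1, 2           # stuff classes
VEHICLE, PEDESTRIAN = 3, 4               # things classes
STUFF = {GROUND, FACADE, TREE}
THINGS = {VEHICLE, PEDESTRIAN}
LABEL_DIVISOR = 1000                     # assumed packing: class_id * 1000 + instance_id

Point = Tuple[float, float, float]       # (X right, Y down, Z forward) in the left camera frame


def panoptic_id(class_id: int, instance_id: int) -> int:
    """Pack a class label and (for things classes only) an instance ID into one integer."""
    if class_id in STUFF:
        return class_id * LABEL_DIVISOR  # stuff: the instance ID is ignored
    if class_id in THINGS and 1 <= instance_id < LABEL_DIVISOR:
        return class_id * LABEL_DIVISOR + instance_id
    raise ValueError(f"invalid label ({class_id}, {instance_id})")


def labelled_points(disparity: List[List[float]], panoptic: List[List[int]],
                    f: float, baseline: float, cx: float, cy: float
                    ) -> List[Tuple[int, Point]]:
    """Triangulate a rectified disparity map and attach each pixel's panoptic ID."""
    out: List[Tuple[int, Point]] = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if not d > 0.0:              # zero, negative or NaN disparity: no valid depth
                continue
            z = f * baseline / d         # depth Z = f * B / d
            x = (u - cx) * z / f         # back-project pixel column u
            y = (v - cy) * z / f         # back-project pixel row v
            out.append((panoptic[v][u], (x, y, z)))
    return out


def stuff_points(points: List[Tuple[int, Point]], class_id: int) -> List[Point]:
    """Points of one stuff class, e.g. facade points to associate with a city model."""
    return [p for pid, p in points if pid // LABEL_DIVISOR == class_id]


def thing_instances(points: List[Tuple[int, Point]], class_id: int) -> Dict[int, List[Point]]:
    """Group the points of one things class by instance, e.g. one cluster per vehicle."""
    groups: Dict[int, List[Point]] = {}
    for pid, p in points:
        cls, inst = divmod(pid, LABEL_DIVISOR)
        if cls == class_id:
            groups.setdefault(inst, []).append(p)
    return groups


# Usage with a tiny 2 x 3 image and made-up calibration values.
disparity = [[50.0, 25.0, 25.0],
             [0.0, 10.0, 10.0]]
panoptic = [[panoptic_id(FACADE, 0), panoptic_id(FACADE, 0), panoptic_id(VEHICLE, 1)],
            [panoptic_id(GROUND, 0), panoptic_id(VEHICLE, 2), panoptic_id(VEHICLE, 2)]]
pts = labelled_points(disparity, panoptic, f=500.0, baseline=0.5, cx=1.0, cy=0.5)
facades = stuff_points(pts, FACADE)        # 2 points, at Z = 5.0 and Z = 10.0
vehicles = thing_instances(pts, VEHICLE)   # instance 1: 1 point, instance 2: 2 points
```

Keeping stuff and things in one integer map means a single lookup per pixel decides whether a 3D point is a candidate for matching against the city model or belongs to a specific vehicle.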

References

Coenen, M., Rottensteiner, F., 2021: Pose estimation and 3D reconstruction of vehicles from stereo-images using a subcategory-aware shape prior. ISPRS Journal of Photogrammetry and Remote Sensing (181), 27-47. DOI: 10.1016/j.isprsjprs.2021.07.006

García Fernández, N., 2020: Simulation framework for collaborative navigation: Development - Analysis - Optimization. PhD thesis, Institute of Geodesy, Leibniz University Hannover, Germany, German Geodetic Commission, Series C (Dissertations), Nr. 855.

Kirillov, A., He, K., Girshick, R., Rother, C., Dollár, P., 2019: Panoptic segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 9396-9405. DOI: 10.1109/CVPR.2019.00963

Mehltretter, M., Heipke, C., 2021: Aleatoric uncertainty estimation for dense stereo matching via CNN-based cost volume analysis. ISPRS Journal of Photogrammetry and Remote Sensing (171), 63-75. DOI: 10.1016/j.isprsjprs.2020.11.003

Trusheim, P., Chen, Y., Rottensteiner, F., Heipke, C., 2021: Cooperative localisation using image sensors in a dynamic traffic scenario. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences XLIII-B1-2021, 117-124. DOI: 10.5194/isprs-archives-XLIII-B1-2021-117-2021

Unger, J., 2020: Integrated estimation of UAV image orientation with a generalised building model. PhD thesis, Institute of Photogrammetry and GeoInformation, Leibniz University Hannover, Germany, Wissenschaftliche Arbeiten der Fachrichtung Geodäsie und Geoinformatik der Leibniz Universität Hannover, Nr. 361.